The Skills Credibility Crisis

Every organization today is racing to become skills-first. It's no longer optional; it's a strategic imperative. CHROs are launching skills taxonomies. Talent leaders are investing in learning platforms. Business units are demanding workforce agility.

But here's the uncomfortable question nobody's asking: How reliable is your skills data?

If you're like most organizations, the honest answer is: you don't really know.

When Skills Data Becomes Skills Fiction

The irony of the skills revolution is stark. We have more skills data than ever before, yet less confidence in what it actually tells us.

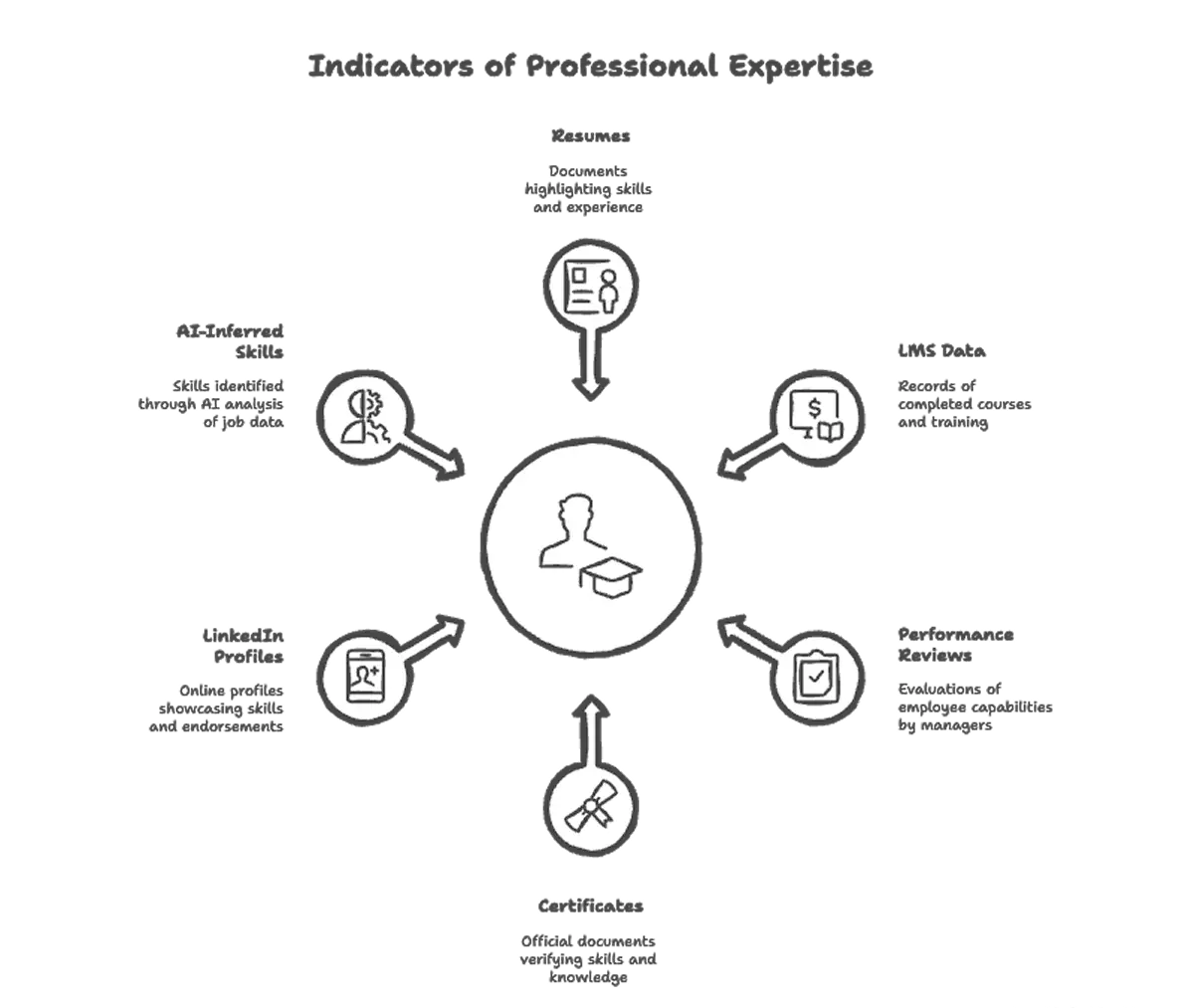

Walk into any large enterprise and you'll find an overwhelming abundance of data points:

- Resumes and job applications filled with self-proclaimed expertise

- Learning Management Systems tracking thousands of completed courses

- Performance reviews where managers attest to employee capabilities

- Certificates and credentials from vendors, bootcamps, and universities

- LinkedIn profiles showcasing skills badges and endorsements

- AI-inferred skills from parsing job descriptions and work history

The problem isn't collecting this data. The problem is trusting it.

Because when skills data lacks credibility, whether the inaccuracy is intentional or not, it becomes worse than useless. It becomes dangerous. It leads to:

- Mis-hires based on inflated capabilities

- Failed reskilling programs built on inaccurate baseline assessments

- Poor internal mobility decisions that set employees up to fail

- Misallocated L&D budgets addressing the wrong capability gaps

- Strategic blind spots where leadership thinks they have skills they don't

The questions haunting talent leaders are straightforward but critical:

- Which employees truly have validated skills?

- How much of this data is actually fresh and current?

- Where are the blind spots across different departments and roles?

- Can we confidently make talent decisions based on what our systems tell us?

If you can't answer these questions with certainty, you don't have a skills strategy. You have a skills illusion.

The Validation Gap: Why Traditional Skills Data Falls Short

Let's break down why most organizations struggle with skills credibility:

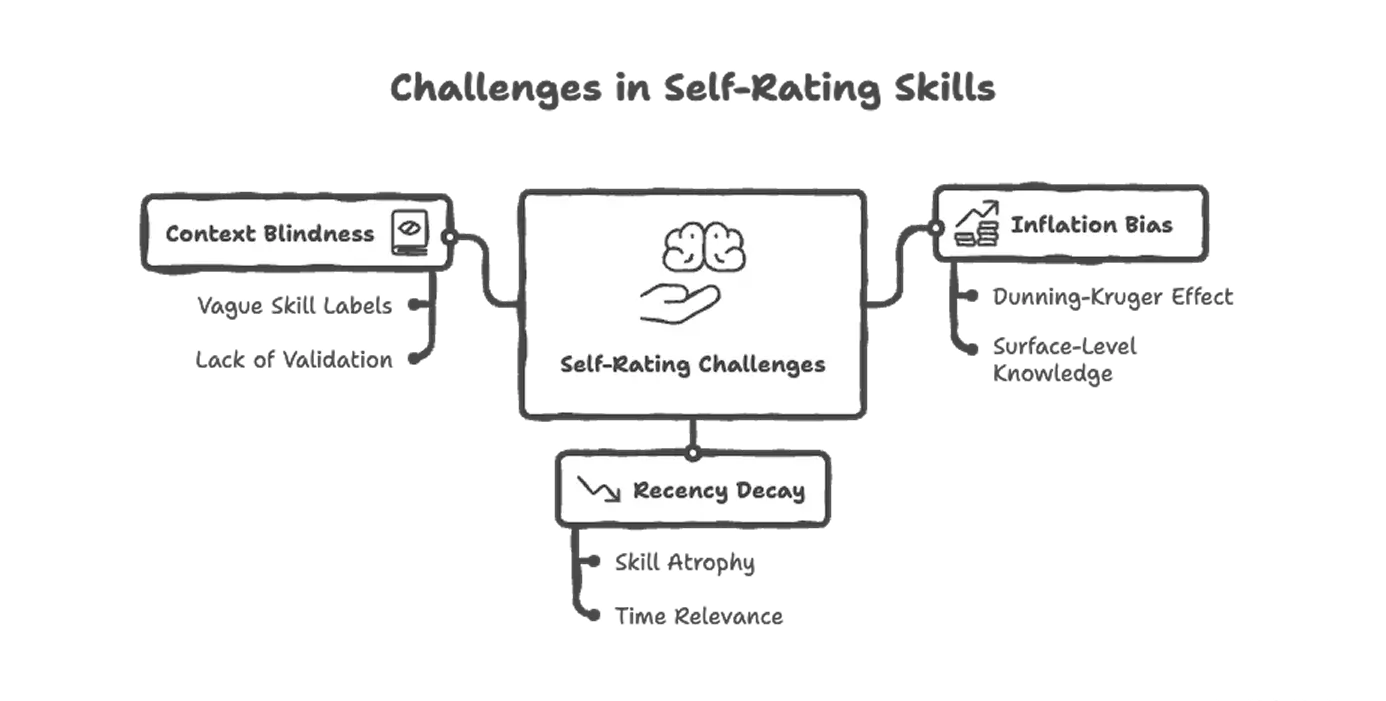

1. Self-Declaration Is Not Validation

The majority of skills data in enterprise systems comes from self-reporting. Employees add skills to their profiles. Candidates list competencies on resumes. Managers affirm capabilities during reviews. None of these is an independent test of what a person can actually do.

2. Learning Completion ≠ Skill Acquisition

Your LMS shows that 500 employees completed a data analytics course. Does that mean you now have 500 data analysts?

Of course not.

Course completion tells you someone showed up. It doesn't tell you:

- Whether they retained the knowledge

- If they can apply it in real-world scenarios

- How their proficiency compares to role requirements

- Whether the skill is being actively used or has gone dormant

Yet many organizations treat learning data as if it's skills data. It's not. It's an input, not an outcome.

3. Credentials Show Participation, Not Mastery

Certificates and badges have proliferated. Some are rigorous and meaningful. Many are participation trophies.

Without understanding:

- What the credential actually tested

- How recently it was earned

- Whether the skills are being applied

- How the standard compares to your organizational needs

...credentials become noise, not signal.

4. AI-Inferred Skills Are Educated Guesses

Many modern platforms use AI to infer skills from job descriptions, work history, or project data. This seems elegant until you realize:

- Job titles are wildly inconsistent across companies

- Job descriptions often describe aspirational requirements, not actual work

- Project involvement doesn't equal skill mastery

- AI can't assess the quality of work or depth of expertise

AI-inferred skills are hypothesis generators. They're not validation.

5. Point-in-Time Snapshots Go Stale Quickly

Even when you do validate skills, that validation has a shelf life. Technical skills evolve rapidly. Business contexts change. Employees move to roles where certain skills go unused and fade.

A skills inventory that's six months old might already be 30% inaccurate. A year-old inventory is essentially historical fiction.

The Cost of Skills Illusion

When organizations make decisions based on unreliable skills data, the consequences ripple across the entire talent lifecycle:

In Hiring: You think you're assessing candidates against validated capability benchmarks, but you're actually comparing them against inflated internal profiles. You hire for "culture fit" because you can't confidently assess skill fit.

In Talent Mobility: High-potential employees get moved into roles where they lack critical skills, not because they're incapable, but because your data showed capabilities that weren't actually there. They struggle, morale suffers, and you label it a "poor fit."

In Workforce Planning: Leadership wants to know "do we have the skills to execute this strategy?" The data says yes. Six months later, you're scrambling to hire externally because the internal capabilities didn't exist.

In Learning Strategy: You're investing millions in upskilling programs designed to close gaps that your data identified. But if the baseline was wrong, you're solving the wrong problem. Learners sit through training for skills they already have or skip training for gaps they don't know exist.

In Strategic Decisions: The board wants to enter a new market or launch a new product. Your workforce analytics indicate you have the technical capabilities needed. You commit. Only then do you discover the skills weren't real.

The stakes are too high for guesswork masquerading as data.

The Breakthrough: From Skills Data to Skills Intelligence

This is where iMocha's Skill Validation Snapshot & Coverage Dashboard transforms the equation. It doesn't give you another view of the same questionable data. It gives you something fundamentally different: truth.

Not a data view. A lens of truth for your workforce skills.

How It Works: Creating Skills Confidence

The Skill Validation Snapshot & Coverage Dashboard consolidates every validation source across your organization into a single, transparent, and actionable view:

- Assessment scores from role-specific skill validations

- Certification data with context about rigor and recency

- Learning completions tied to demonstrated competency, not just attendance

- Manager validations structured around observable behaviors

- Self-assessments benchmarked against actual performance data

- Real-world work samples and project outcomes

The Power of Transparency: What Leaders Can Now See

The dashboard doesn't just show how many employees claim a skill. It shows how that validation was achieved, creating unprecedented transparency:

Validation Method Breakdown:

- 45% validated through technical assessments

- 25% confirmed via recent project work

- 20% certified with current credentials

- 10% manager-validated based on observation

This lets you immediately understand: Is this a confident data point or a soft assumption?
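As a rough illustration, a breakdown like the one above is just a share-of-total calculation over validation records. The sketch below is not iMocha's implementation; the method labels, the `records` sample, and the `method_breakdown` helper are all hypothetical, and it assumes each employee is tagged with a single validation method.

```python
from collections import Counter

# Hypothetical sample: one entry per employee holding the skill,
# tagged with the validation method on file (labels are assumptions).
records = (
    ["technical_assessment"] * 9
    + ["recent_project_work"] * 5
    + ["current_certification"] * 4
    + ["manager_observation"] * 2
)


def method_breakdown(methods):
    """Return each validation method's share of all records, in whole percent."""
    counts = Counter(methods)
    total = sum(counts.values())
    # An empty input yields an empty dict, so no division by zero occurs.
    return {method: round(100 * n / total) for method, n in counts.items()}


breakdown = method_breakdown(records)
for method, pct in breakdown.items():
    print(f"{pct:>3}%  {method}")
```

A real system would likely allow several validation methods per person, in which case the shares would no longer sum to 100%.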

Coverage Heatmaps: You can visualize skill coverage across your organization with confidence levels:

- Green zones: Skills with high validation and coverage

- Yellow zones: Skills present but with validation gaps

- Red zones: Critical skill gaps or low-confidence data

No more blind spots. No more surprises.

Individual Validation Profiles: For any employee, you can see:

- Which skills are strongly validated (recent assessment, applied in work, manager-confirmed)

- Which skills are self-declared but unvalidated

- Which skills have become stale (validated >12 months ago, not recently applied)

- Skill trajectory over time: are they gaining or losing proficiency?
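To make the profile categories concrete, here is a minimal sketch of how a single skill record might be bucketed. Only the 12-month staleness rule comes from the list above; the `SkillRecord` fields, the use of the same 12-month window for "recently applied", and the "partially validated" bucket are assumptions made for illustration.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional

STALE_AFTER_DAYS = 365  # "validated >12 months ago", approximated in days


@dataclass
class SkillRecord:
    name: str
    last_validated: Optional[date]  # None means self-declared only
    last_applied: Optional[date]    # None means no recorded use in work


def validation_status(record: SkillRecord, today: date) -> str:
    """Bucket a skill record; the bucket names are hypothetical."""
    if record.last_validated is None:
        return "self-declared"
    validated_recently = (today - record.last_validated).days <= STALE_AFTER_DAYS
    applied_recently = (
        record.last_applied is not None
        and (today - record.last_applied).days <= STALE_AFTER_DAYS
    )
    if validated_recently and applied_recently:
        return "strongly validated"
    if not validated_recently and not applied_recently:
        return "stale"
    # Assumed middle bucket: either validated or applied recently, not both.
    return "partially validated"


today = date(2025, 1, 1)
print(validation_status(SkillRecord("SQL", date(2024, 11, 1), date(2024, 12, 1)), today))
```

Manager confirmation is left out of this sketch; it could be added as one more field on the record.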

Seeing Progress in Motion: Skills as a Journey, Not a Snapshot

Skill validation isn't a one-time event. It's an ongoing journey. Your workforce is constantly evolving. People are learning, applying, forgetting, and relearning skills continuously.

The Monthly Momentum Section brings that journey to life through visualization:

Validation Velocity Tracking

You can see month-over-month:

- How many employees are getting skills validated (showing upskilling momentum)

- How many new skills are being added to profiles (showing organizational skill expansion)

- Whether validation rates are accelerating or stagnating (showing cultural engagement with skills validation)

This is a leading indicator of organizational learning culture. If validation rates are climbing, your people are actively engaged in growth. If they're flat or declining, it's an early warning sign.

Coverage Gap Closure

For critical skills that your organization is trying to build:

- Track how coverage is increasing month by month

- See which teams are making progress vs. falling behind

- Identify which reskilling programs are working and which aren't moving the needle

This transforms learning from a compliance checkbox into a measurable capability-building engine.

Data Freshness Trends

Is your skills data getting fresher or staler over time?

The dashboard tracks the average freshness score across your organization and shows the trend:

- Improving freshness: Your validation practices are working, and data confidence is growing

- Declining freshness: Skills data is aging, and you need to refresh validations or risk making decisions on outdated information

Individual Progress Journeys

For employees enrolled in development programs, you can visualize their skills journey:

- Skills at baseline (with confidence levels)

- Skills post-training (with validation proof)

- Skills applied in work (with project evidence)

- Skills mastery over time (showing proficiency growth curves)

This isn't just motivating for learners. It's evidence that your L&D investments are working.

From Guesswork to Confidence: Real-World Applications

Let's look at how the Skill Validation Snapshot & Coverage Dashboard transforms critical talent decisions:

Use Case 1: Internal Mobility with Confidence

Before: An employee applies for a senior data engineering role. Their profile shows "Python, SQL, Cloud Architecture" as skills. You move them. Three months later, they're struggling because their Python experience was limited to basic scripts five years ago, and their "cloud architecture" knowledge was theoretical.

After: The dashboard shows:

- Python: Self-declared, last validated 4 years ago, low freshness score

- SQL: Assessment-validated 2 months ago, high proficiency, high freshness score

- Cloud Architecture: Course completed, no real-world application, medium freshness score

You make an informed decision: move them into a role that leverages their strong SQL skills, and provide intensive Python and cloud upskilling with validation checkpoints before considering senior engineering roles.

Use Case 2: Strategic Workforce Planning That's Grounded in Reality

Before: Leadership asks: "Do we have the AI/ML capabilities to build this new product feature in-house?" HR pulls a report showing 50 employees with "Machine Learning" on their profiles. You greenlight an internal build. Six months later, you're behind schedule because most of those employees had only completed an intro course and couldn't architect production ML systems.

After: The dashboard shows:

- 50 employees with ML listed

- Only 8 with assessment-validated proficiency

- Only 3 with recent project application

- Only 1 with validated capability at the advanced level needed

You make the strategic call: hire 2-3 external ML engineers to anchor the team, upskill the 8 validated employees to fill supporting roles, and build a longer-term capability pipeline. Your timeline is realistic, and the project succeeds.
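The narrowing from 50 listed profiles to a single advanced practitioner can be thought of as a chain of filters. The sketch below assumes the four counts are nested (each group is a subset of the one before), which the scenario implies but does not state; the `MLProfile` fields and the synthetic data are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class MLProfile:
    employee_id: int
    assessed: bool = False        # passed a skill assessment
    recent_project: bool = False  # applied ML in recent project work
    level: str = "unknown"        # assumed labels: unknown/intermediate/advanced


# Synthetic data shaped to match the scenario: 50 listed, 8 assessed,
# 3 with recent project work, 1 at the advanced level.
profiles = [MLProfile(i) for i in range(50)]
for p in profiles[:8]:
    p.assessed = True
    p.level = "intermediate"
for p in profiles[:3]:
    p.recent_project = True
profiles[0].level = "advanced"


def funnel(profiles):
    """Count how many profiles survive each successive validation filter."""
    assessed = [p for p in profiles if p.assessed]
    applied = [p for p in assessed if p.recent_project]
    advanced = [p for p in applied if p.level == "advanced"]
    return {
        "listed": len(profiles),
        "assessment_validated": len(assessed),
        "recent_project": len(applied),
        "advanced": len(advanced),
    }


counts = funnel(profiles)
print(counts)
```

The headline number (50) and the decision-relevant number (1) come from the same data; the difference is which filters are applied.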

Use Case 3: Reskilling Programs That Actually Work

Before: You launch a data analytics reskilling program for 200 employees. Post-training surveys show high satisfaction. Six months later, analytics usage hasn't increased, and hiring managers still complain about capability gaps.

After: The dashboard shows:

- Pre-training: 200 employees, 15% with validated analytics skills

- Post-training: 200 employees completed, but only 40% passed validation assessments

- 3 months later: Only 25% are applying analytics skills in their work (freshness score tracked)

- 6 months later: Proficiency levels among active users have increased, but coverage remains low

You diagnose the issue: the training was too advanced for most learners' baseline, and there wasn't enough hands-on application baked into workflows. You redesign the program with prerequisites, more practical exercises, and manager-led application projects. The next cohort shows 70% validation rates and 60% sustained application.

You've transformed L&D from a cost center into a measurable capability engine.

Use Case 4: Hiring Decisions Anchored in Reality

Before: You're hiring for a critical role. You want to prioritize internal candidates to build morale and retention. But you're not sure if any internal candidates are truly qualified, so you hedge by opening external searches simultaneously. The process drags on, and internal candidates feel overlooked.

After: The dashboard shows:

- 3 internal candidates with relevant skills

- Candidate A: High validation scores, recent application, clear proficiency trajectory

- Candidate B: Mixed validation, some gaps but strong foundation

- Candidate C: Mostly self-declared, low confidence data

You fast-track Candidate A with confidence, offer targeted upskilling to Candidate B as a backup, and focus your external search only if needed. The internal candidate succeeds, morale lifts, and you've saved 3 months of recruiting time.

Building a Culture of Skills Confidence

The Skill Validation Snapshot & Coverage Dashboard doesn't just provide data. It creates behavioral change.

When employees see that validated skills unlock opportunities (mobility, projects, development resources), they become invested in validation. It shifts from an HR compliance activity to a career accelerator.

When managers see that validated team capability helps them staff projects confidently and hit goals, they champion skills assessments instead of viewing them as administrative burden.

When leaders see that skills intelligence enables faster, better talent decisions, they invest in building validation infrastructure instead of tolerating skills illusion.

The dashboard doesn't just measure confidence. It builds it.

The Shift: From Counting Skills to Understanding Capability

There's a fundamental difference between:

Skills Data: "We have 500 people with Python listed"

Skills Intelligence: "We have 47 people with recently validated, job-applied Python proficiency at intermediate or higher levels, concentrated in Engineering and Data teams, with freshness scores averaging 8.5/10"

The first statement is a number. The second is insight you can act on.
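To illustrate the difference, here is a small sketch that turns raw rows into the second kind of statement. The row layout, the level ordering, the 0-10 freshness field, and the `summarize` helper are all assumptions; the sample is tiny and does not reproduce the figures quoted above.

```python
from collections import defaultdict
from statistics import mean

LEVELS = {"beginner": 1, "intermediate": 2, "advanced": 3}

# Hypothetical rows: (team, level, recently_validated, applied_on_job, freshness 0-10)
rows = [
    ("Engineering", "advanced", True, True, 9.0),
    ("Engineering", "intermediate", True, True, 8.0),
    ("Data", "intermediate", True, True, 8.5),
    ("Marketing", "beginner", False, False, 2.0),
    ("Data", "advanced", False, True, 4.0),
    ("Finance", "intermediate", True, False, 7.0),
]


def summarize(rows, min_level="intermediate"):
    """Compare the raw headcount with the validated, job-applied subset."""
    by_team = defaultdict(list)
    for team, level, validated, applied, freshness in rows:
        if validated and applied and LEVELS[level] >= LEVELS[min_level]:
            by_team[team].append(freshness)
    qualified = [f for scores in by_team.values() for f in scores]
    return {
        "listed": len(rows),          # the "skills data" number
        "qualified": len(qualified),  # the "skills intelligence" number
        "by_team": {team: len(scores) for team, scores in by_team.items()},
        "avg_freshness": round(mean(qualified), 1) if qualified else None,
    }


summary = summarize(rows)
print(summary)
```

Here six people list the skill, but only three meet all the criteria, and the summary says where they sit and how current their validation is.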

That's the shift iMocha's Skill Validation Snapshot & Coverage Dashboard enables: from counting to understanding, from data to confidence, from illusion to intelligence.

Your Workforce Deserves Better Than Guesswork

The stakes are too high, and the pace of change too fast, to base talent decisions on unreliable data.

Your people deserve a system that recognizes and validates their real capabilities, not one that relies on self-declared profiles that may or may not reflect reality.

Your business deserves workforce intelligence that enables confident strategy execution, not guesswork that leads to costly missteps.

Your talent leaders deserve tools that create clarity and confidence, not dashboards that look impressive but hide fundamental data quality problems.

The Skill Validation Snapshot & Coverage Dashboard is that tool. Not another data view. A lens of truth that transforms skills illusion into skills intelligence.

Because in a world where every organization is trying to become skills-first, the winners won't be those with the most skills data.

They'll be the ones who know which skills data to trust.

iMocha's Skills Intelligence Platform helps organizations move from skills data to skills confidence. Our Skill Validation Snapshot & Coverage Dashboard provides the transparency, freshness tracking, and validation rigor that transform talent decisions from educated guesses into strategic advantages. Because your workforce strategy deserves better than illusion. It deserves intelligence.
